If we build (or buy) the best data platform we can afford, the users will be clamoring to use it, right?

…and when they don’t, we’ll blame the software, switch platforms, and repeat the cycle. Or we’ll just grumble and stick with Excel.

When this happens, there’s understandable frustration from leadership and staff, disengagement, lost trust, missed opportunities, and a lot of wasted time and money.

Why implementations fail

Sure, some platforms are better than others. Some are better for specific purposes. Is it possible you chose the wrong software? Yes, of course. However, the reason for failure is usually not the platform itself. It often comes down to implementation, the people, and the culture.

Even the best software can fail to be adopted. Let’s look at some of the reasons why.

Unrealistic Expectations

Everyone wants an easy button for data analytics, but the truth is, even the best analytics software relies on your organization’s data and culture. This expectation of an “easy button” causes companies to abandon products, let them languish, or continually switch products in search of that elusive solution. (And some business intelligence vendors are marketing to and profiting from this very expectation… tsk tsk.)

What contributes to unmet expectations?

- Number of source systems: The more applications or data inputs you have, the more complex and challenging it becomes to establish and support your data ecosystem.

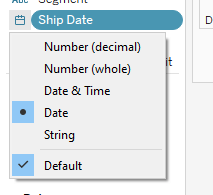

- Data warehousing: A well-structured data warehouse, data strategy, and supporting toolset improve the durability and scalability of your BI implementation. This involves a technology stack and architecture that supports transferring data from source systems, loading data to your data warehouse, and transforming the data to suit reporting needs.

- Reporting Maturity: If you don’t have a good handle on historical reporting and business definitions, you won’t immediately jump into advanced analytics. A couple of side notes:

- Does AI solve this? Probably not. You still need a solid understanding of data quality, business rules, and metric definitions to get meaningful insights and interpret what’s presented. Worst case, you could get bad insights and not even realize it.

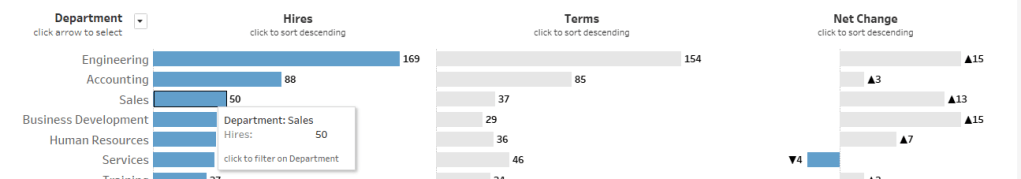

- If you currently create reports manually, someone is likely also doing manual data cleanup and review. Automation means you’ll need to address any sparse or unclean data and clarify any loosely defined logic. This can be time-consuming and catch business leaders off guard.

- Learning Curve: No matter how user-friendly a tool is, there’s always a learning curve.

  - Analysts (or report creators) need time to learn a new tool, so initial rollout and adoption will be slower.

  - General business users will need time to get comfortable with the new reports, which may have a different look, feel, and functionality.

  - If you’ve had data quality issues (or no reporting) in the past, there can also be a lag in adoption while trust is established.
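To make the point about sparse or unclean data more concrete, here is a minimal sketch of the kind of checks that manual report builders often do in their heads and that automation forces you to spell out. The records, field names, and the one-year staleness threshold are all hypothetical, chosen only for illustration.

```python
from datetime import date

# Hypothetical customer records, standing in for an export from a source system.
RECORDS = [
    {"id": 1, "email": "a@example.com", "region": "East", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None, "region": "east ", "updated": date(2022, 1, 15)},
    {"id": 3, "email": "", "region": "West", "updated": date(2024, 4, 20)},
]


def profile(records, as_of, stale_after_days=365):
    """Summarize missing values, stale rows, and inconsistent region labels."""
    total = len(records)
    if total == 0:
        return {"missing_rate": {}, "stale_rows": 0, "inconsistent_region_labels": 0}

    # Share of rows where each field is empty (None or an empty string).
    fields = sorted({key for row in records for key in row})
    missing_rate = {
        field: sum(1 for row in records if row.get(field) in (None, "")) / total
        for field in fields
    }

    # Rows not updated within the (assumed) staleness window.
    stale_rows = sum(
        1 for row in records if (as_of - row["updated"]).days > stale_after_days
    )

    # Labels that differ only by case or whitespace, e.g. "East" vs "east ".
    raw_regions = {row["region"] for row in records if row.get("region")}
    normalized = {value.strip().lower() for value in raw_regions}

    return {
        "missing_rate": missing_rate,
        "stale_rows": stale_rows,
        "inconsistent_region_labels": len(raw_regions) - len(normalized),
    }


report = profile(RECORDS, as_of=date(2024, 6, 1))
```

Even on three rows, a profile like this surfaces the questions someone has to answer before a report can be automated: Is an empty email acceptable? How old is too old? Which spelling of a region is the official one?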

So, what happens when we’ve properly set expectations and understand what we’re getting into, but the product still doesn’t support the business needs? Let’s look at some other factors:

Implementation Choices

We tend to make choices based on what we know. It’s human nature. The modern data stack, however, changes how we can think about implementing a data warehouse.

A note on cost: Quality tools don’t have to be the most expensive, but be very cautious about over-indexing on cost or overlooking long-term and at-scale costs.

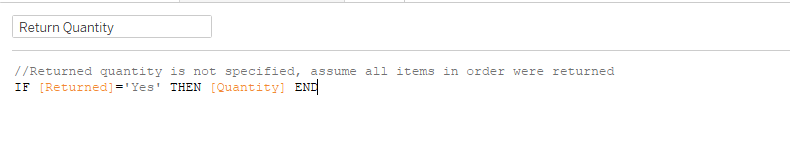

- ETL vs. ELT: With ETL (Extract, Transform, Load), we extract only the necessary data from the source system, transform it to fit specific purposes, and load the transformed data to the data warehouse. This means each new use case may require new data engineering efforts. With ELT (Extract, Load, Transform), the raw or near-raw data is stored in the data warehouse, allowing for more flexibility in how that data is used downstream. Because of this, modular and reusable transformations can significantly reduce the maintenance of deployed models and reduce the effort required for new data models.

- Availability and Usability: Decisions that limit how available or usable your data is, whether made from a lack of knowledge or in an attempt to control costs, can sink your project.

- Governance & Security: This is a balancing act. Data security is a top concern for most companies. Governance is critical to a secure, scalable, and trusted business intelligence practice. But consider the scale and processes carefully. Excessive security or red tape will cause a lack of adoption, increased turnaround time, frustration, and eventually, abandonment. This abandonment may not always be immediately apparent—it’s nearly impossible to understand the scale and impact of “Shadow BI.”
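The ETL vs. ELT distinction above can be easier to see in a small example. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a data warehouse; the table, view names, and sample rows are hypothetical. Raw data is loaded unchanged, and the transformations live in the warehouse as layered views, so one staging model can feed several reporting models.

```python
import sqlite3

# Hypothetical raw rows as they might arrive from a source system.
RAW_ORDERS = [
    (1, "2024-01-05", "east", 120.0, "complete"),
    (2, "2024-01-06", "west", 80.0, "cancelled"),
    (3, "2024-01-06", "east", 200.0, "complete"),
]


def load_raw(conn):
    """Extract + Load: land the source rows unchanged in a raw table."""
    conn.execute(
        "CREATE TABLE raw_orders "
        "(id INTEGER, order_date TEXT, region TEXT, amount REAL, status TEXT)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?, ?)", RAW_ORDERS)


def build_models(conn):
    """Transform: build layered, reusable models inside the warehouse."""
    # Staging model: the single place that defines what counts as a valid order.
    conn.execute(
        "CREATE VIEW stg_orders AS "
        "SELECT id, order_date, region, amount FROM raw_orders "
        "WHERE status = 'complete'"
    )
    # Two reporting models reuse the same staging logic.
    conn.execute(
        "CREATE VIEW revenue_by_region AS "
        "SELECT region, SUM(amount) AS revenue FROM stg_orders GROUP BY region"
    )
    conn.execute(
        "CREATE VIEW revenue_by_day AS "
        "SELECT order_date, SUM(amount) AS revenue FROM stg_orders GROUP BY order_date"
    )


def run_pipeline():
    """Run load and transform against an in-memory database."""
    conn = sqlite3.connect(":memory:")
    load_raw(conn)
    build_models(conn)
    return conn


conn = run_pipeline()
rows = conn.execute("SELECT region, revenue FROM revenue_by_region").fetchall()
```

Because the raw table is still there, a new question (say, cancelled orders by region) only needs another view, not a new extraction job. In a classic ETL setup, the cancelled rows might never have been loaded in the first place.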

People and Culture

Make sure everyone knows why:

- Change Management: Depending on the organization’s data literacy and analytics maturity, driving adoption could be a significant challenge.

- Trust and Quality: If you aren’t currently using analytics extensively, you may not realize how sparse, out-of-date, or disparate your data is. Be prepared to invest time in understanding data quality and improving data stewardship.

- Resistance: Change is hard. Some users resist new processes and automation. If leadership fails to communicate the reasons for the change or isn’t fully bought in, resistance can stifle adoption, lead to silos, and create a general mess.

- Change Fatigue: If staff have recently experienced a lot of change (including previous attempts at implementing new BI tools), they’ll be tired. It’s not always avoidable but may need to be handled with more patience and support.

Enablement and Support

Would you rather learn to swim in a warm pool with a lifeguard or be thrown off a boat into the cold ocean and told to swim to shore?

- Training: Many software companies offer free resources to get different types of users started. Beyond that, you can contract expert trainers or pay for e-learning resources. You may even have training resources already on your company learning platform. Please don’t skip this.

- Support: Do you have individuals or teams who can support users in identifying, understanding, and using data? Where can users go with questions or issues? This is likely a combination of resources like product documentation and forums, an internal expert for environment-specific questions, and peer-to-peer support.

- Community: Connect new users by creating an internal data community. No one is alone in this, so help your users help each other. Your community (or CoE, or CoP) can be large, but don’t underestimate the value of something as simple as a Slack channel for knowledge sharing, peer-to-peer support, and organic connection.

- Resources: Make sure people know what resources exist, have the information they need readily available, and know how to get help. You didn’t create all these resources and documentation for them to sit unused.

How to increase your chances of success

- Invest in a well-planned foundation.

- Prioritize user enablement and user needs.

- Champion effective change management.

- Foster a data-driven culture: Promote data literacy, celebrate successes, and reward data-driven decision-making.

Because even the best software can fail to be adopted.

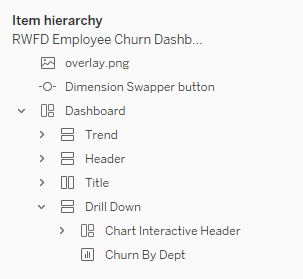

One of the reasons I love Tableau is that they’ve long recognized the many factors and decisions that lead to a successful implementation, and they created the Tableau Blueprint around them. The Blueprint is an amazing resource that guides organizations, their tech teams, and their users through many of the considerations, options, and steps involved. It’s very thorough and definitely worth a read.

Happy Vizzing!